We don’t Need no Stinkin’ Dark Matter

Extra Acceleration

You’ve heard of dark matter, right? Some sort of exotic particle that lurks in the outskirts of galaxies.

Maybe you know the story of elusive dark matter. Its first apparent home was in clusters of galaxies: in the 1930s Fritz Zwicky postulated unseen mass in the Coma Cluster to explain the excessive random motions of the galaxies that he measured.

There have been eight decades of discovery and measurement of the gravitational anomalies that dark matter is said to cause, and eight decades of notable failure to directly find any very faint ordinary matter, black holes, or exotic particle matter in sufficient quantities to explain the magnitude of the observed anomalies.

If dark matter is actually real and composed of particles or primordial black holes, then on average there is five times as much mass per unit volume in that form as in ordinary matter. Ordinary matter consists principally of protons and neutrons, mostly in the form of hydrogen and helium atoms and ions.
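A figure like "five times" comes from fitting the standard cosmological model to the cosmic microwave background. The minimal Python sketch below reproduces the arithmetic. It assumes rounded Planck 2018 best-fit densities (Ω_c h² ≈ 0.120 for cold dark matter, Ω_b h² ≈ 0.0224 for ordinary matter). These inputs are illustrative and do not come from this post.

```python
# Rounded Planck 2018 best-fit physical densities (an assumption for
# illustration). "h" is the dimensionless Hubble parameter.
OMEGA_CDM_H2 = 0.120   # cold dark matter: Omega_c * h^2
OMEGA_B_H2 = 0.0224    # ordinary (baryonic) matter: Omega_b * h^2

# The h^2 factors cancel, so this is directly the ratio of mean mass densities.
ratio = OMEGA_CDM_H2 / OMEGA_B_H2
print(f"dark matter / ordinary matter = {ratio:.2f}")  # prints 5.36
```

The result is a little over 5, which is the "five times" quoted above.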

Why do we call it dark? It gives off no light. Ordinary matter gives off light; it radiates. What else gives off no light? A gravitational field stronger than predicted by existing laws.

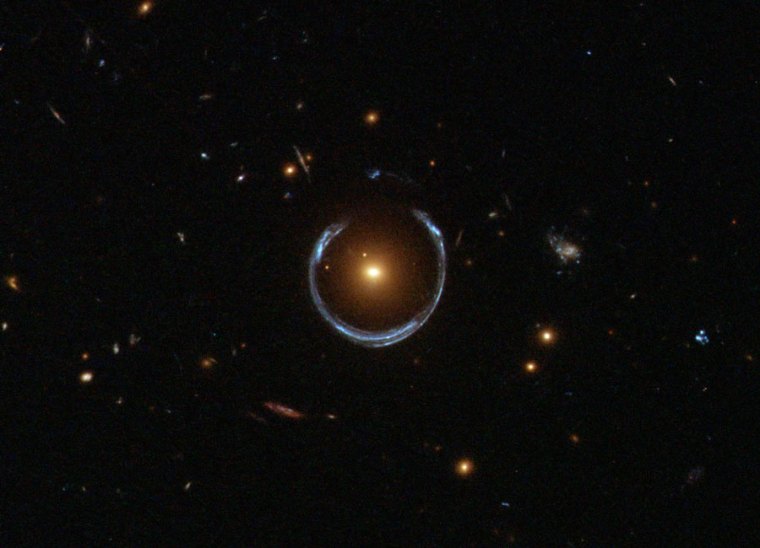

Gravitational anomalies are seen in the outer regions of galaxies by examining galaxy rotation curves, which flatten out unexpectedly with distance from the galactic center. They are seen in galaxy groups and clusters from measuring galaxy velocity dispersions, from X-ray observations of intracluster gas, and from gravitational lensing measurements. A dark matter component is also deduced at the cosmic scale from the power spectrum of the cosmic microwave background spatial variations.
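A flat rotation curve is surprising because of what Newtonian gravity predicts. Far from a galaxy's center, nearly all the visible mass lies inside the orbit and acts like a point mass. The minimal Python sketch below computes that expectation for a hypothetical galaxy of 10¹¹ solar masses. The mass and the point-mass treatment are illustrative assumptions.

```python
import math

G = 6.674e-8          # gravitational constant, cgs (cm^3 g^-1 s^-2)
M_SUN = 1.989e33      # solar mass in grams
KPC = 3.086e21        # one kiloparsec in centimeters

def keplerian_speed_kms(mass_msun: float, r_kpc: float) -> float:
    """Newtonian circular speed (km/s) at radius r around a point mass."""
    v_cm_s = math.sqrt(G * mass_msun * M_SUN / (r_kpc * KPC))
    return v_cm_s / 1e5   # cm/s -> km/s

# Hypothetical galaxy: 1e11 solar masses, treated as concentrated at the center.
M_GAL = 1e11
for r in (10, 20, 40, 80):
    print(f"r = {r:3d} kpc  Newtonian v = {keplerian_speed_kms(M_GAL, r):6.1f} km/s")
```

Each fourfold increase in radius halves the Newtonian speed (v ∝ r^(-1/2)). Observed rotation curves, by contrast, stay roughly flat out to large radii.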

The excessive velocities due to extra acceleration are caused either by dark matter or by some departure of gravity from the predictions of general relativity.

Actually, at high accelerations general relativity is the required model, but at low accelerations Newtonian dynamics is an accurate approximation. The discrepancies arise only at very low accelerations. The excess velocities, X-ray emission, and lensing signals are all observed in very low acceleration environments. So what we are really talking about is an alternative of extra gravity over and above the 1/r² law of Newtonian dynamics.

Alternatives to General Relativity and Newtonian Dynamics

There are multiple proposed laws for modifying gravity at very low accelerations. To match observations, the effect should start to kick in for accelerations less than c * H. Here c is the speed of light and H is the Hubble expansion parameter, whose inverse is nearly equal to the present age of the universe.

Expressed in centimeters per second per second, c * H is only around 1/14,000,000 cm/s², or about 7 × 10⁻⁸ cm/s². That is some 14 billion times smaller than the acceleration due to gravity at Earth’s surface. This is not something typically measurable in Earth-bound laboratories; scientists have trouble pinning down the value of the gravitational constant G to within 1 part in 10,000.
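The minimal Python sketch below checks the size of c * H and the claim that 1/H is close to the age of the universe. It assumes a round Hubble constant of 70 km/s/Mpc, which is not a value given in this post. The exact answer shifts by a few percent depending on which measured value of H is used.

```python
C = 2.998e10            # speed of light, cm/s
MPC = 3.086e24          # one megaparsec in cm
GYR = 3.156e16          # one gigayear in seconds
G_EARTH = 980.7         # Earth's surface gravity, cm/s^2

H0_KM_S_MPC = 70.0      # assumed round value of the Hubble constant

h0 = H0_KM_S_MPC * 1e5 / MPC     # convert km/s/Mpc to 1/s
a_ch = C * h0                    # the acceleration scale c * H, in cm/s^2

print(f"H0 = {h0:.3e} 1/s, Hubble time 1/H0 = {1 / h0 / GYR:.1f} Gyr")
print(f"c * H0 = {a_ch:.2e} cm/s^2, i.e. 1/{1 / a_ch / 1e6:.1f} million cm/s^2")
print(f"Earth's surface gravity is about {G_EARTH / a_ch:.1e} times larger")
```

With these inputs, 1/H comes out near 14 billion years and c * H near 7 × 10⁻⁸ cm/s².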

The match between this acceleration threshold and c * H is a rather profound coincidence. It suggests that something fundamental is at play in the nature of gravity itself, not necessarily a somewhat arbitrary creation of exotic dark matter particles in the very early universe: perhaps an additional component of gravity tied in some way to the age and state of our universe.

Do you think of general relativity as the last word on gravity? From an Occam’s razor point of view, it is actually simpler to think about modifying the laws of gravity in very low acceleration environments than to postulate an exotic, never-seen-in-the-lab dark matter particle. And we already know that general relativity is incomplete, since it is not a quantum theory.

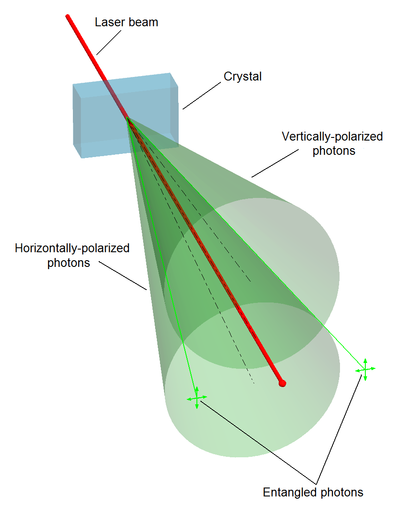

The emergent gravity concept neatly sidesteps the quantum issue by proposing that gravity is not fundamental in the way that electromagnetism and the nuclear forces are. Rather, it is described as an emergent property of a system, arising from the quantum entanglement of fields and particles. In this view, the fabric of space also arises from this entanglement. Gravity is a statistical property of the system, arising from the entropy (in thermodynamic terms) of entanglement at each point.

Dark Energy

Now for a necessary aside on dark energy. Did you know that dark energy is on firmer footing now than dark matter? And that dark energy can be described simply by additional energy and pressure components in the stress-energy tensor, entirely within general relativity?

We know that dark energy dominates over dark matter in the canonical cosmological model for the universe, Lambda-Cold Dark Matter (ΛCDM). In that model, about 2/3 of the universe’s energy density is dark energy. As a result, the expansion increasingly approximates a de Sitter solution of general relativity, with exponential ‘runaway’ expansion.
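The approach to a de Sitter solution can be illustrated with the Friedmann equation for a flat universe containing matter and a cosmological constant. The minimal Python sketch below neglects radiation. It assumes present-day fractions of 0.31 for matter and 0.69 for dark energy, which are illustrative round numbers consistent with "about 2/3".

```python
import math

OMEGA_M = 0.31    # assumed matter fraction today (ordinary + dark matter)
OMEGA_L = 0.69    # assumed dark energy fraction today (roughly 2/3)

def hubble_ratio(a: float) -> float:
    """H(a)/H0 for a flat universe with matter and a cosmological constant.
    a is the scale factor, equal to 1 today."""
    return math.sqrt(OMEGA_M / a**3 + OMEGA_L)

for a in (1, 2, 5, 10, 100):
    print(f"scale factor a = {a:4d}  H/H0 = {hubble_ratio(a):.4f}")

# As a grows, the matter term dilutes away and H(a) tends to the constant
# H0 * sqrt(OMEGA_L). A constant Hubble rate means da/dt = H a, so a grows
# exponentially, a ~ exp(H t): the de Sitter 'runaway' expansion.
print(f"de Sitter limit: H/H0 -> {math.sqrt(OMEGA_L):.4f}")
```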

Dark Gravity

As we discuss this no-dark-matter alternative, we refer to it as dark gravity, or dark acceleration. Regardless of the nature of dark matter and dark gravity, the combination of ordinary gravity and dark gravity is still insufficient to halt the expansion of the universe. In this view, dark gravity is due to ordinary matter; there is just more gravity than we expect, again only in environments where the acceleration is of order c * H or lower.

Some of the proposed laws for modified gravity are:

- MOND – Modified Newtonian Dynamics, from Milgrom

- Emergent gravity, from Verlinde

- Metric skew tensor gravity (MSTG), from Moffat (and also its more recent variant, scalar-tensor-vector gravity (STVG), sometimes called MOG, for Modified Gravity)

Think of dark gravity as an additional term in the equations, beyond the gravity we are familiar with. Each of the models adds a term to Newtonian gravity that becomes significant only for accelerations less than c * H. The details vary between the proposed alternatives. All do a good job of matching the rotation curves of spiral galaxies and the Tully-Fisher relation between a spiral galaxy’s luminosity and its rotation speed.
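MOND is the simplest of these models to illustrate. In MOND the true acceleration g is related to the Newtonian acceleration g_N by μ(g/a₀) g = g_N, where a₀ is a small acceleration scale. The minimal Python sketch below makes two assumptions that this post does not specify. It uses the commonly quoted a₀ ≈ 1.2 × 10⁻⁸ cm/s², which is somewhat below c * H. It also uses the so-called "simple" interpolating function μ(x) = x/(1 + x), which is one of several choices in the literature. The galaxy mass is the same hypothetical 10¹¹ solar masses, treated as a point mass.

```python
import math

G = 6.674e-8        # gravitational constant, cgs
M_SUN = 1.989e33    # solar mass, g
KPC = 3.086e21      # kiloparsec, cm
A0 = 1.2e-8         # MOND acceleration scale, cm/s^2 (commonly quoted value)

def mond_acceleration(g_newton: float, a0: float = A0) -> float:
    """Solve mu(g/a0) * g = g_N with mu(x) = x / (1 + x).
    This gives g^2 - g_N g - g_N a0 = 0; take the positive root."""
    return 0.5 * (g_newton + math.sqrt(g_newton**2 + 4.0 * g_newton * a0))

def circular_speed_kms(mass_msun: float, r_kpc: float, mond: bool) -> float:
    r = r_kpc * KPC
    g_n = G * mass_msun * M_SUN / r**2          # Newtonian point-mass acceleration
    g = mond_acceleration(g_n) if mond else g_n
    return math.sqrt(g * r) / 1e5               # v^2 / r = g, converted to km/s

M_GAL = 1e11   # hypothetical baryonic mass in solar masses
for r in (10, 20, 40, 80, 160):
    print(f"r = {r:3d} kpc  Newton {circular_speed_kms(M_GAL, r, False):6.1f} km/s"
          f"  MOND {circular_speed_kms(M_GAL, r, True):6.1f} km/s")

# Deep-MOND limit (g_N << a0): v^4 = G M a0, a baryonic Tully-Fisher relation.
v_flat = (G * M_GAL * M_SUN * A0) ** 0.25 / 1e5
print(f"asymptotic flat speed = {v_flat:.1f} km/s")
```

The Newtonian speed keeps falling with radius. The MOND speed instead levels off near (G M a₀)^(1/4), about 200 km/s for this hypothetical mass. The fourth-power dependence of mass on velocity is the Tully-Fisher-like behavior mentioned above.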

Things are trickier in clusters of galaxies, which are studied through galaxy velocity dispersions, X-ray emission from intracluster gas, and gravitational lensing. The MOND model appears to come up short by a factor of about two in explaining the total dark gravity implied.

Emergent gravity and modified gravity theories including MSTG claim to be able to match the observations in clusters.

Clusters of Galaxies

Most galaxies are found in groups and clusters.

Clusters and groups form from the collapse of overdense regions of hydrogen and helium gas in the early universe. Collapsing under its own gravity, such a region will heat up via frictional processes and cooler sub-regions will collapse further to form galaxies within the cluster.

Rich clusters have hundreds or even thousands of galaxies. Their gravitational potential is so deep that the gas is heated to tens of millions of degrees by friction and shock waves, and it gives off X-rays. The X-ray emission from clusters has been actively studied with satellite observatories since the 1970s.

What is found is that most of the ordinary matter is in the form of intracluster gas, not galaxies. Some of this is leftover primordial gas that never formed galaxies. Some is gas that was once in galaxies but was expelled by energetic processes, especially supernovae.

Observations indicate that around 90% of (ordinary) matter is in the form of intracluster gas, and only around 10% within the galaxies in the form of stars or interstellar gas and dust. Thus modeling the mass profile of a cluster is best done by looking at how the X-ray emission falls off as one moves away from the center of a cluster.
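In the standard Newtonian analysis, the temperature of the gas and how steeply its density falls off give the total mass needed to hold the gas in hydrostatic equilibrium. The minimal Python sketch below assumes isothermal gas with a "beta-model" density profile ρ ∝ [1 + (r/r_c)²]^(-3β/2). This is a common fitting form for cluster X-ray profiles, but this post does not specify it. The cluster parameters are hypothetical: kT = 6 keV (roughly 70 million K), β = 2/3, a core radius of 200 kpc, and a mean molecular weight of 0.6.

```python
G = 6.674e-8          # gravitational constant, cgs
M_P = 1.6726e-24      # proton mass, g
KEV = 1.602e-9        # one keV in erg
M_SUN = 1.989e33      # solar mass, g
KPC = 3.086e21        # kiloparsec, cm

def hydrostatic_mass_msun(r_kpc, kT_keV, beta, rc_kpc, mu=0.6):
    """Newtonian total mass (solar masses) inside r for isothermal gas with a
    beta-model density profile, from hydrostatic equilibrium:
    M(<r) = -(kT r / (G mu m_p)) * dln(rho)/dln(r)."""
    x2 = (r_kpc / rc_kpc) ** 2
    dlnrho_dlnr = -3.0 * beta * x2 / (1.0 + x2)   # logarithmic density slope
    r = r_kpc * KPC
    mass_g = -(kT_keV * KEV * r) / (G * mu * M_P) * dlnrho_dlnr
    return mass_g / M_SUN

# Hypothetical rich cluster: kT = 6 keV, beta = 2/3, core radius 200 kpc.
for r in (250, 500, 1000, 2000):
    m = hydrostatic_mass_msun(r, kT_keV=6.0, beta=2/3, rc_kpc=200.0)
    print(f"M(<{r:4d} kpc) = {m:.2e} solar masses")
```

Inside 1 Mpc this gives a few times 10¹⁴ solar masses, that is, hundreds of trillions. In the standard picture, that total exceeds the observed gas and stellar mass, and the difference is attributed to dark matter.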

In their 2005 paper, Brownstein and Moffat compiled X-ray emission profiles for a sample of 106 galaxy clusters and fit gas mass and temperature profiles as a function of radius. They found that an MSTG model can reproduce the X-ray emission with a mass profile that does not require dark matter.

The figure below shows the average profile of cumulative mass interior to a given radius. The mass is in units of solar masses and runs into the hundreds of trillions. The radius extends beyond 1000 kiloparsecs, or 1 megaparsec (a parsec is 3.26 light-years).

The bottom line is that emergent gravity and MSTG both claim explanatory power, without any dark matter, for observations of galaxy rotation curves, weak gravitational lensing around galaxies (Brouwer et al. 2016), and cluster mass profiles deduced from the X-ray emission of hot gas.

Figure 2 from J.R. Brownstein and J.W. Moffat (2005), “Galaxy Cluster Masses without Non-Baryonic Dark Matter”. Shown is the cumulative mass required as a function of radius. The red curve is the average of X-ray observations from a sample of 106 clusters. The black curve is the authors’ model assuming MSTG, a good match. The cyan curve is the MOND model and the blue curve is a Newtonian model; both require dark matter. The point is that the authors can match observations with much less matter, and there is no need to postulate additional exotic dark matter.

What we would very much like to see, for either of these dark gravity models, is a better explanation of the cosmic microwave background density perturbation spectrum at the cosmic scale. The STVG variant of MSTG claims to address those observations as well, without the need for dark matter.

In future posts we may look at that issue. We may also look at the so-called ‘silver bullet’ that dark matter proponents often promote: the Bullet Cluster, which consists of two colliding galaxy clusters and shows a claimed separation of dark matter from gas.

References

Brouwer, M. et al. 2016, “First test of Verlinde’s theory of Emergent Gravity using Weak Gravitational Lensing Measurements”, https://arxiv.org/abs/1612.03034v2

Brownstein, J. and Moffat, J. 2005, “Galaxy Cluster Masses without Non-baryonic Dark Matter”, https://arxiv.org/abs/astro-ph/0507222

Perrenod, S. 1977, “The Evolution of Cluster X-ray Sources”, thesis, http://adsabs.harvard.edu/abs/1978ApJ…226..566P

https://darkmatterdarkenergy.com/2018/09/19/matter-and-energy-tell-spacetime-how-to-be-dark-gravity/

https://darkmatterdarkenergy.com/2016/12/30/emergent-gravity-verlindes-proposal/

https://darkmatterdarkenergy.com/2016/12/09/modified-newtonian-dynamics-is-there-something-to-it/

If space is defined by the connectivity of quantum entangled particles, then it becomes almost natural to consider gravity as an emergent statistical attribute of the spacetime. After all, we learned from general relativity that “matter tells space how to curve, curved space tells matter how to move” – John Wheeler.
