Dark Stars is the name given to hypothetical stars in the early universe that were overwhelmingly composed of ordinary matter (baryons [protons and neutrons] and electrons) but that also were ‘salted’ with a little bit of dark matter.

Stars in this category have not evolved to the point of stellar nucleosynthesis in their cores; instead they are, again hypothetically, heated by the dark matter within.

Professor Katherine Freese of the University of Texas physics department (previously at the University of Michigan) and others have been suggesting the possibility of dark stars for well over a decade; see, for example, “The Effect of Dark Matter on the First Stars: A New Phase of Stellar Evolution”.

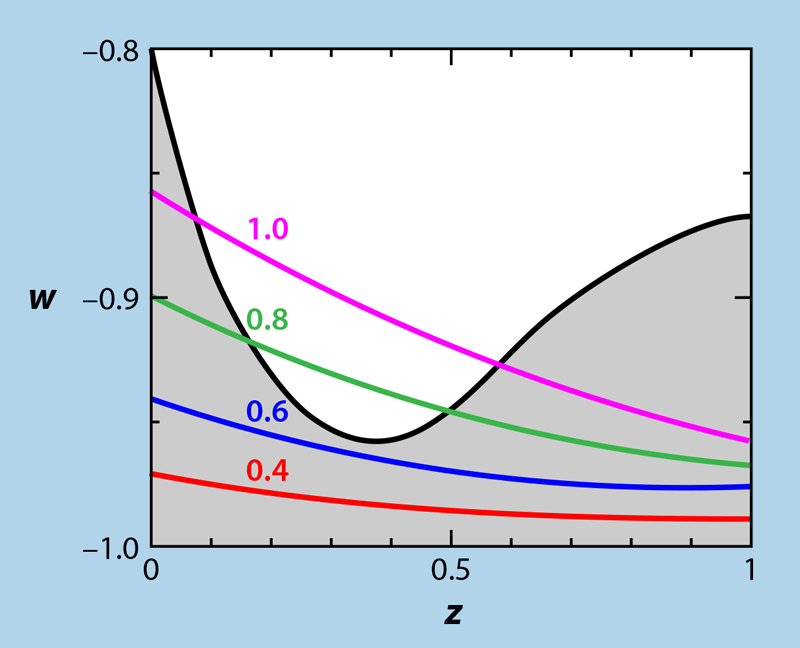

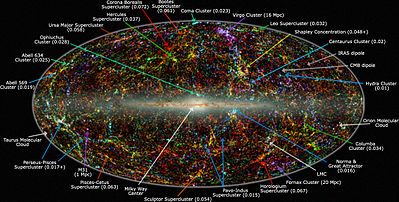

In a paper from last year, “Dark stars powered by self-interacting dark matter,” authors Wu, Baum, Freese, Visinelli, and Yu propose dark stars powered by a type of self-interacting dark matter (SIDM). The authors start by considering overdense regions known as ‘halos’ when the universe was about 200 million years old, corresponding to a redshift of z ~ 20. Such halos are expected to form through the gravitational instability of the slightly overdense regions that we see in cosmic microwave background maps, which show the universe at an age of only 0.38 million years.
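
As a rough check on the age-redshift correspondence above, here is a minimal Python sketch of the closed-form cosmic age for a flat universe containing only matter and a cosmological constant. The parameter values (H0 = 67.7 km/s/Mpc, Ωm = 0.31) are assumptions chosen to be roughly Planck-like, not values taken from the paper, and radiation is neglected, which is harmless at z ~ 20 but not near the CMB epoch.

```python
import math

# ASSUMED flat Lambda-CDM parameters (roughly Planck-like, not from the paper)
H0_KM_S_MPC = 67.7
OMEGA_M = 0.31
OMEGA_L = 1.0 - OMEGA_M  # flatness assumed; radiation neglected

# Hubble time 1/H0 in billions of years: 977.8 / H0[km/s/Mpc]
HUBBLE_TIME_GYR = 977.8 / H0_KM_S_MPC


def age_at_redshift_myr(z: float) -> float:
    """Cosmic age at redshift z (in Myr) for a flat matter + Lambda universe.

    Closed form: t = 2 / (3 H0 sqrt(OL)) * asinh(sqrt(OL / Om) * (1 + z)^-1.5).
    Neglecting radiation is fine at z ~ 20 but not near z ~ 1100.
    """
    x = math.sqrt(OMEGA_L / OMEGA_M) * (1.0 + z) ** -1.5
    t_gyr = 2.0 / (3.0 * math.sqrt(OMEGA_L)) * HUBBLE_TIME_GYR * math.asinh(x)
    return 1000.0 * t_gyr


for z in (20, 10):
    print(f"z = {z:>2}: age ~ {age_at_redshift_myr(z):.0f} Myr")
```

With these assumptions z = 20 comes out near 180 million years, consistent with the roughly 200 million years quoted, and z = 10 near 470 million years.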

One starts out with these dark-matter-dominated halos, but the ordinary matter within them collapses much more efficiently because it can radiate energy away electromagnetically. Dark matter, by definition, does not interact electromagnetically; that is why we don’t see it except through its gravitational effects (or if it were to decay into normal matter). That also limits its ability to cool and collapse, other than through decay processes.

As the normal matter radiates, cools, and collapses further, it concentrates toward the center, separating from the bulk of the dark matter halo, though it would still carry along a modest amount of dark matter. A dark star might be fueled by a dark matter mass of only about 0.1% of its ordinary matter mass.
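
To see why so small a fraction can matter, the sketch below compares rest-mass energies under two loudly stated assumptions: that all of the dark matter in a 10-solar-mass star (the model mass discussed below) eventually annihilates, and that all of the released energy stays in the star. Hydrogen fusion, by contrast, releases only about 0.7% of the fused mass as energy.

```python
# Illustrative energy budget; ASSUMES all dark matter eventually annihilates
# and all released energy is deposited in the star (not a dark star model).
M_SUN_KG = 1.989e30        # solar mass
C_M_S = 2.998e8            # speed of light
FUSION_EFFICIENCY = 0.007  # H -> He converts ~0.7% of fused mass to energy

star_mass_msun = 10.0      # illustrative stellar mass
dm_fraction = 0.001        # dark matter mass ~0.1% of ordinary matter mass

# Approximate the dark matter mass as 0.1% of the total stellar mass
dm_mass_kg = dm_fraction * star_mass_msun * M_SUN_KG

# Annihilation converts the full rest mass of particle + antiparticle to energy
annihilation_energy_j = dm_mass_kg * C_M_S**2

# Fraction of the star's mass that would have to fuse to release the same energy
equivalent_fused_fraction = dm_fraction / FUSION_EFFICIENCY

print(f"dark matter mass: {dm_mass_kg / M_SUN_KG:.3f} solar masses")
print(f"rest-mass energy: {annihilation_energy_j:.2e} J")
print(f"equivalent fused fraction: {equivalent_fused_fraction:.0%}")
```

Under these assumptions, annihilating 0.1% of the mass releases as much energy as fusing about 14% of it. This is only an energy-budget comparison, not a model of how dark matter is supplied or consumed over a dark star's lifetime.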

New Particles χ, φ

This SIDM scenario requires two new particles that the authors refer to as χ and φ. The χ dark matter particle (a fermion) could have a mass of order 100 GeV, similar to that of the Higgs boson (about 125 GeV, although the Higgs is a boson rather than a fermion), and some 100 times that of a proton or neutron.

The φ particle (a scalar field) is a low-mass mediator in the dark sector, with a mass in the range of 1 to 100 MeV, closer to that of an electron or a muon (a heavier cousin of the electron). This particle would mediate between the χ and ordinary matter (baryons and electrons).
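
The mass comparisons in the two paragraphs above can be checked with a few lines of Python. The particle masses are rounded standard values; m_chi = 100 GeV is the order-of-magnitude figure from the text, and `can_decay_to_ee` is a hypothetical helper illustrating the kinematic requirement that a particle decaying at rest into an electron/positron pair must be heavier than two electron masses (about 1.022 MeV).

```python
# Rounded particle masses in MeV (standard values)
M_ELECTRON_MEV = 0.511
M_PROTON_MEV = 938.27

m_chi_mev = 100_000.0  # chi mass of order 100 GeV, as in the text


def can_decay_to_ee(m_phi_mev: float) -> bool:
    """Hypothetical helper: True if a particle of this mass, decaying at rest,
    has enough energy to produce an electron/positron pair (m > 2 m_e)."""
    return m_phi_mev > 2.0 * M_ELECTRON_MEV


print(f"m_chi / m_proton ~ {m_chi_mev / M_PROTON_MEV:.0f}")
for m_phi_mev in (1.0, 10.0, 100.0):  # spanning the quoted 1-100 MeV range
    verdict = "allowed" if can_decay_to_ee(m_phi_mev) else "forbidden"
    print(f"phi mass {m_phi_mev:5.1f} MeV: decay to e+ e- {verdict}")
```

So a 100 GeV χ is about 107 proton masses, and any φ that decays to electron/positron pairs must lie above roughly 1.02 MeV, essentially the bottom of the quoted 1 to 100 MeV range.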

The main heating mechanism is the annihilation of χ with its antiparticle into pairs of (neutral) φ particles, which can then decay to electron/positron pairs. These electrons and positrons easily thermalize within the ionized hydrogen and helium gas of the cloud, and they ultimately annihilate to gamma rays when they meet their antiparticles.
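
Treating χ as essentially at rest (dark matter is non-relativistic), each χ/anti-χ annihilation releases 2 m_χ c², about 200 GeV for m_χ = 100 GeV, with each of the two φ particles carrying roughly m_χ c². The sketch below estimates how many annihilations per second a star of given luminosity would need, assuming that all of this energy thermalizes in the gas and that annihilation supplies the entire luminosity; `annihilation_rate_per_s` is a hypothetical helper, not something from the paper.

```python
GEV_TO_J = 1.602177e-10   # 1 GeV in joules
L_SUN_W = 3.828e26        # nominal solar luminosity (W)


def annihilation_rate_per_s(luminosity_lsun: float, m_chi_gev: float = 100.0) -> float:
    """Hypothetical helper: chi/anti-chi annihilations per second needed to supply
    the given luminosity, assuming chi is at rest (each annihilation releases
    2 * m_chi * c^2, shared by two phi particles) and all energy thermalizes."""
    energy_per_annihilation_j = 2.0 * m_chi_gev * GEV_TO_J
    return luminosity_lsun * L_SUN_W / energy_per_annihilation_j


print(f"{annihilation_rate_per_s(150_000):.2e} annihilations per second")
```

For the 150,000 solar-luminosity model described below, this comes to about 1.8 × 10^39 annihilations per second.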

The mean decay length of the φ would need to be of order 1 AU (astronomical unit, the Earth-Sun distance) or less, so that the decays deposit their energy as heat within the protostellar cloud. The clouds can heat up to several thousand Kelvin, making them very efficient radiators. And since they can be large, more than 1 AU in radius, their luminosity can also be very large.
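
Because the φ particles are produced moving fast, the 1 AU requirement translates into a limit on the φ lifetime only once time dilation is included. The mean decay length is L = βγcτ, where τ is the lifetime at rest. Assuming φ is produced by χ annihilation at rest, each φ has energy m_χ c², so γ = m_χ/m_φ. The sketch below inverts that relation; `max_phi_lifetime_s` is a hypothetical helper, and the masses are picked from the ranges above purely for illustration.

```python
import math

C_M_S = 2.998e8   # speed of light
AU_M = 1.496e11   # astronomical unit


def max_phi_lifetime_s(m_chi_gev: float, m_phi_mev: float, max_length_m: float = AU_M) -> float:
    """Hypothetical helper: longest rest-frame phi lifetime tau for which the mean
    decay length beta*gamma*c*tau stays below max_length_m.

    Assumes chi + anti-chi -> phi + phi with chi at rest, so each phi has
    energy m_chi and Lorentz factor gamma = m_chi / m_phi.
    """
    gamma = (m_chi_gev * 1000.0) / m_phi_mev
    if gamma <= 1.0:
        raise ValueError("phi must be lighter than chi to be produced this way")
    beta_gamma = math.sqrt(gamma**2 - 1.0)  # beta*gamma = momentum / mass
    return max_length_m / (beta_gamma * C_M_S)


for m_phi_mev in (10.0, 100.0):
    tau = max_phi_lifetime_s(100.0, m_phi_mev)
    print(f"m_phi = {m_phi_mev:5.1f} MeV: tau must be below ~{tau:.3f} s")
```

For m_χ = 100 GeV and m_φ = 10 MeV (γ = 10^4), the φ lifetime would need to be below about 0.05 s; a lighter φ is boosted more and so must decay faster to stay within 1 AU.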

A Model Dark Star

In their paper, Wu and co-authors model a 10-solar-mass dark star with a photospheric radius of 3.2 AU. If placed at the Sun’s location, such a dark star would have its photosphere extending beyond the orbits of Earth and Mars, out to the outer edge of the asteroid belt.

The temperature of their model star is 4300 Kelvin, about 3/4 that of the Sun. Despite the lower temperature, the star’s large size gives it a total luminosity 150,000 times that of our Sun. It would be reddish in color at the source, but its light would reach us highly redshifted into the infrared. This luminosity is comparable to that of the most luminous red supergiant stars, and not much greater intrinsically than that of the (much hotter) blue supergiant Rigel.
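
The quoted luminosity follows from the Stefan-Boltzmann law, which for a blackbody gives L/L_sun = (R/R_sun)²(T/T_sun)⁴. The sketch below treats the photosphere as a blackbody (an approximation) and uses the nominal solar values R_sun = 6.957 × 10^8 m and T_sun = 5772 K. It also applies Wien's displacement law and the (1 + z) stretching of wavelengths to estimate where the emission peak would land if the star sat at z = 20, the epoch discussed earlier.

```python
R_SUN_M = 6.957e8        # nominal solar radius (m)
T_SUN_K = 5772.0         # nominal solar effective temperature (K)
AU_M = 1.496e11          # astronomical unit (m)
WIEN_B_M_K = 2.898e-3    # Wien displacement constant (m K)


def luminosity_lsun(radius_au: float, temp_k: float) -> float:
    """Blackbody luminosity in solar units: (R / R_sun)^2 * (T / T_sun)^4."""
    r_ratio = radius_au * AU_M / R_SUN_M
    return r_ratio**2 * (temp_k / T_SUN_K) ** 4


def observed_peak_um(temp_k: float, z: float) -> float:
    """Wien peak wavelength (micrometres) after stretching by a factor (1 + z)."""
    return WIEN_B_M_K / temp_k * (1.0 + z) * 1e6


lum = luminosity_lsun(3.2, 4300.0)
print(f"luminosity ~ {lum:,.0f} L_sun")
print(f"peak at source ~ {observed_peak_um(4300.0, 0.0):.2f} um")
print(f"peak observed from z = 20 ~ {observed_peak_um(4300.0, 20.0):.1f} um")
```

This gives about 146,000 solar luminosities, consistent with the 150,000 quoted, and moves the peak from about 0.67 μm (red) at the source to roughly 14 μm in the mid-infrared.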

Because such a star would belong to the first stellar generation, its spectrum would show no lines from elements heavier than hydrogen and helium, apart from at most a trace of lithium: only hydrogen, helium, and a trace amount of lithium were formed in the Big Bang.

Black holes as a result?

Other dark stars might have temperatures of 10,000 Kelvin and might accrete matter from the surrounding halo until they reach luminosities as high as 10 million times that of the Sun. Such stars might be visible to the James Webb Space Telescope (JWST).
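
Inverting the Stefan-Boltzmann relation L/L_sun = (R/R_sun)²(T/T_sun)⁴ shows how large such a star would have to be. The sketch below again treats the photosphere as a blackbody with nominal solar values and solves for the radius; `blackbody_radius_au` is a hypothetical helper introduced only for this illustration.

```python
import math

R_SUN_M = 6.957e8   # nominal solar radius (m)
T_SUN_K = 5772.0    # nominal solar effective temperature (K)
AU_M = 1.496e11     # astronomical unit (m)


def blackbody_radius_au(luminosity_lsun: float, temp_k: float) -> float:
    """Hypothetical helper: invert L/Lsun = (R/Rsun)^2 (T/Tsun)^4 for R, in AU."""
    r_ratio = math.sqrt(luminosity_lsun) * (T_SUN_K / temp_k) ** 2
    return r_ratio * R_SUN_M / AU_M


print(f"1e7 L_sun at 10,000 K: R ~ {blackbody_radius_au(1e7, 10_000.0):.1f} AU")
print(f"1.5e5 L_sun at 4300 K: R ~ {blackbody_radius_au(150_000, 4300.0):.1f} AU")
```

A 10,000 Kelvin star radiating 10 million solar luminosities would have a radius of about 5 AU under these assumptions, while the second line recovers roughly the 3.2 AU of the model star. This is an illustrative estimate, not a figure from the paper.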

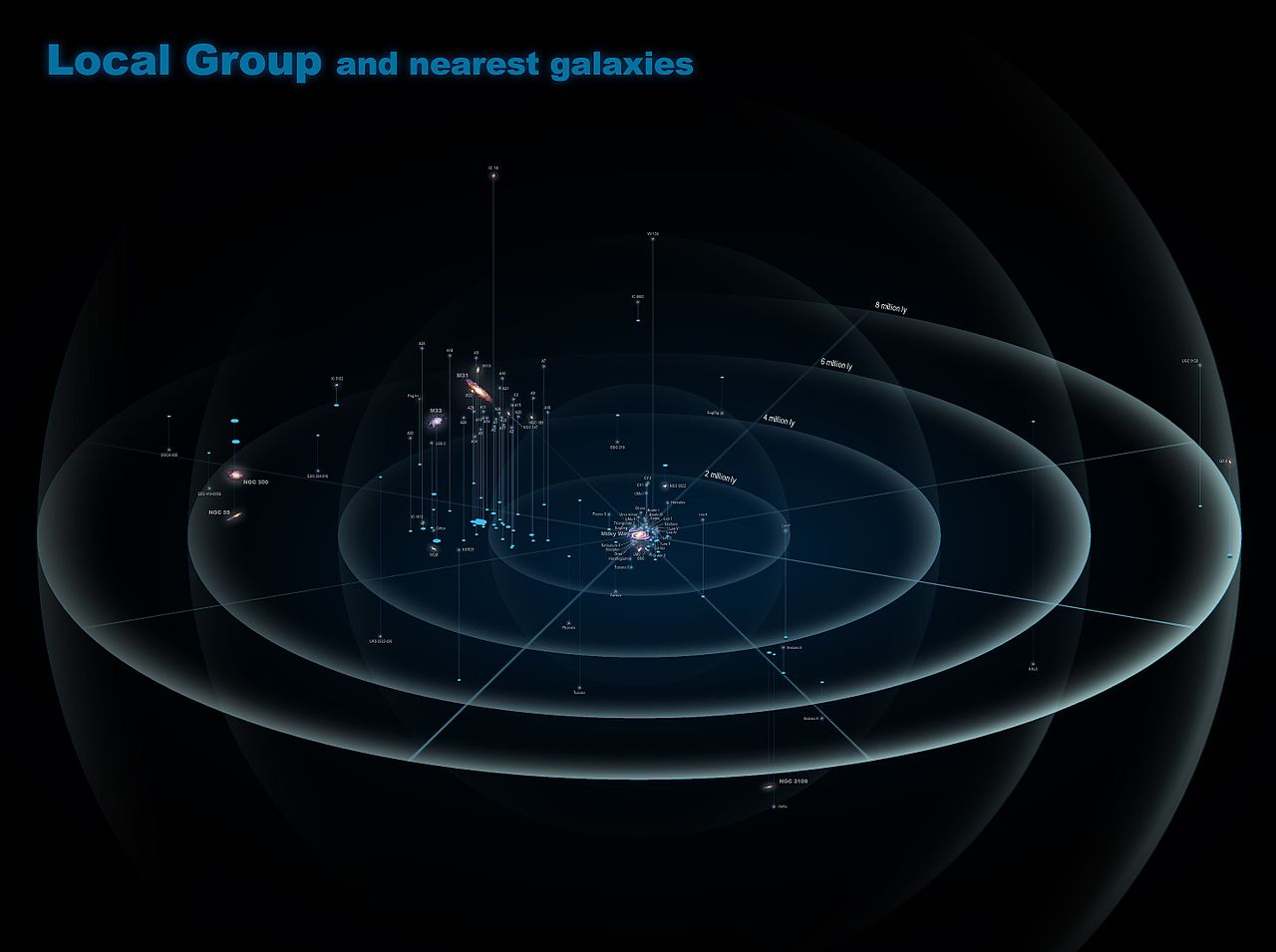

Dark stars might last as long as half a billion years, or the annihilation might shut down sooner due to the collapse of ordinary matter in the protostellar envelope. Once the dark star phase ends, there could be a rapid collapse to high-mass, fusion-powered stars that would end their short lives as black holes, or there could even be direct collapse to high-mass black holes. These would be interesting candidates for the seeds of the supermassive black holes of millions to billions of solar masses that we observe in the centers of galaxies, both nearby and at high redshift.

The James Webb Space Telescope is finding more early galaxies than expected within the first 500 million years of the universe. It can peer back that far thanks to its instruments’ sensitivity in the infrared portion of the spectrum. Light from such early galaxies has been shifted from optical to infrared wavelengths, stretched by a factor of order 10, by the expansion of the universe over the past 13 billion plus years. Perhaps JWST is seeing more early galaxies than expected in part because dark stars hasten the evolution of the stellar population.
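
The stretching follows λ_obs = (1 + z) λ_rest. The sketch below applies it to a few standard rest-frame wavelengths at z = 10, which in standard cosmology corresponds to roughly 500 million years after the Big Bang; the choice of lines is illustrative only.

```python
# Rest-frame wavelengths in nanometres (standard values)
REST_NM = {
    "Lyman-alpha": 121.6,
    "H-alpha": 656.3,
    "green (optical)": 550.0,
}


def observed_um(rest_nm: float, z: float) -> float:
    """Observed wavelength in micrometres: lambda_obs = (1 + z) * lambda_rest."""
    return (1.0 + z) * rest_nm / 1000.0


z = 10.0  # roughly 500 Myr after the Big Bang in standard cosmology
for name, lam in REST_NM.items():
    print(f"{name:>16}: {lam:6.1f} nm -> {observed_um(lam, z):5.2f} um")
```

At z = 10, for example, Hα at 656.3 nm arrives at about 7.2 μm, and even ultraviolet Lyman-alpha at 121.6 nm arrives at about 1.34 μm, in the near infrared.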

We look forward to JWST detecting the earliest stars, which might be dark stars, or else providing constraints on their visibility or viability.

– Stephen Perrenod, Ph.D., September 2023