This is the first in a series of articles on ‘dark gravity’ that look at emergent gravity and modifications to general relativity. In my book Dark Matter, Dark Energy, Dark Gravity I explained that I had picked Dark Gravity to be part of the title because of the serious limitations in our understanding of gravity. It is not like the other 3 forces: we have no well-accepted quantum description of gravity. And it is some 33 orders of magnitude weaker than those other forces.

I noted that:

The big question here is ~ why is gravity so relatively weak, as compared to the other 3 forces of nature? These 3 forces are the electromagnetic force, the strong nuclear force, and the weak nuclear force. Gravity is different ~ it has a dark or hidden side. It may very well operate in extra dimensions… http://amzn.to/2gKwErb

My major regret with the book is that I was not aware of, and did not include a summary of, Erik Verlinde’s work on emergent gravity. In emergent gravity, gravity is not one of the fundamental forces at all.

Erik Verlinde is a leading string theorist in the Netherlands who in 2009 proposed that gravity is an emergent phenomenon, resulting from the thermodynamic entropy of the microstates of quantum fields.

In 2009, Verlinde showed that the laws of gravity may be derived by assuming a form of the holographic principle and the laws of thermodynamics. This may imply that gravity is not a true fundamental force of nature (like e.g. electromagnetism), but instead is a consequence of the universe striving to maximize entropy. – Wikipedia article “Erik Verlinde”

This year (2016), Verlinde extended this work from an unrealistic anti-de Sitter model of the universe to a more realistic de Sitter model. Our runaway universe is approaching a dark-energy-dominated de Sitter solution.

He proposes that general relativity is modified at large scales in a way that mimics the phenomena generally attributed to dark matter. This is in line with MOND, or Modified Newtonian Dynamics, a long-standing proposal from Mordehai Milgrom, who argues that there is no dark matter; rather, gravity is stronger at very low accelerations (typically found at large distances) than predicted by general relativity and Newton’s laws.

In a recent article on cosmology and the nature of gravity, Dr. Thanu Padmanabhan lays out 6 issues with the canonical Lambda-CDM cosmology, which is based on general relativity and a homogeneous, isotropic, expanding universe. Observations strongly support this canonical model, with a very early inflation phase, roughly 2/3 of the mass-energy content in dark energy, and 1/3 in matter (mostly dark matter).

And yet,

1. The equation of state (pressure vs. density) of the early universe is indeterminate in principle, as well as in practice.

2. The history of the universe can be modeled with just 3 energy density parameters: i) the density during inflation, ii) the density at radiation–matter equality, and iii) the dark energy density at late epochs. The first and last both describe dark-energy-driven de Sitter phases that appear unconnected: one very rapid, the other very long-lived. (Dark matter density does not appear here.)

3. One can construct a formula for the information content at the cosmic horizon from these 3 densities, and the value works out to be 4π to high accuracy.

4. There is an absolute reference frame: the one in which the cosmic microwave background is isotropic. There is also an absolute reference scale for time, given by the temperature of the cosmic microwave background.

5. There is an arrow of time, indicated by the expansion of the universe and by the cooling of the cosmic microwave background.

6. The universe has, rather uniquely for physical systems, made a transition from quantum behavior to classical behavior.
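Point 4 can be made concrete: as the universe expands, the CMB temperature falls as T = T₀(1 + z), i.e. T ∝ 1/a, so the temperature a comoving observer measures acts as a cosmic clock. A minimal Python sketch, assuming the measured present-day value T₀ ≈ 2.7255 K (a value not given in the original) and the standard 1/a scaling:

```python
T0_KELVIN = 2.7255  # assumed present-day CMB temperature in kelvin


def scale_factor(z: float) -> float:
    """Scale factor a = 1 / (1 + z), normalized so that a = 1 today."""
    if z <= -1:
        raise ValueError("redshift must exceed -1")
    return 1.0 / (1.0 + z)


def cmb_temperature(z: float) -> float:
    """CMB temperature at redshift z: T = T0 / a = T0 * (1 + z)."""
    return T0_KELVIN / scale_factor(z)


if __name__ == "__main__":
    # Hotter CMB means an earlier epoch: temperature orders cosmic time.
    for z in (0, 1, 10, 1100):
        print(f"z = {z:>5}: a = {scale_factor(z):.2e}, T = {cmb_temperature(z):8.1f} K")
```

Near z ≈ 1100 (the epoch when the CMB was released) this gives roughly 3000 K, which is why the CMB temperature can label that moment unambiguously.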

“The evolution of spacetime itself can be described in a purely thermodynamic language in terms of suitably defined degrees of freedom in the bulk and boundary of a 3-volume.”

Now in fluid mechanics one observes:

“First, if we probe the fluid at scales comparable to the mean free path, you need to take into account the discreteness of molecules etc., and the fluid description breaks down. Second, a fluid simply might not have reached local thermodynamic equilibrium at the scales (which can be large compared to the mean free path) we are interested in.”

Now it is well known that general relativity, as a classical theory, must break down at very small scales (very high energies). But with such a thermodynamic view of spacetime and gravity, one must also consider the possibility that the universe has not reached statistical equilibrium at the largest scales.

One could have reached equilibrium at macroscopic scales much smaller than the Hubble distance c/H (about 14 billion light-years, where H is the Hubble parameter) but not yet at the Hubble scale itself. In that case the standard equations of gravity (general relativity) would apply only within the equilibrium region and for accelerations greater than the characteristic Hubble acceleration scale cH (about 2 centimeters per second per year).
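The two Hubble-scale numbers quoted above follow from the Hubble constant alone. A short Python check, assuming H₀ = 70 km/s/Mpc (a round value not stated in the original):

```python
# Physical constants (SI) and an assumed Hubble constant.
C_M_PER_S = 2.99792458e8             # speed of light
KM_PER_MPC = 3.0856775814913673e19   # kilometres in one megaparsec
LY_M = 9.4607304725808e15            # metres in one light-year
YEAR_S = 3.15576e7                   # seconds in one Julian year
H0_KM_S_MPC = 70.0                   # assumed Hubble constant, km/s/Mpc


def hubble_rate_per_s(h0_km_s_mpc: float) -> float:
    """Convert H from km/s/Mpc to units of 1/s."""
    return h0_km_s_mpc / KM_PER_MPC


def hubble_length_ly(h0_km_s_mpc: float) -> float:
    """Hubble distance c/H, in light-years."""
    return C_M_PER_S / hubble_rate_per_s(h0_km_s_mpc) / LY_M


def hubble_acceleration_m_s2(h0_km_s_mpc: float) -> float:
    """Characteristic Hubble acceleration cH, in m/s^2."""
    return C_M_PER_S * hubble_rate_per_s(h0_km_s_mpc)


if __name__ == "__main__":
    a_h = hubble_acceleration_m_s2(H0_KM_S_MPC)
    print(f"c/H = {hubble_length_ly(H0_KM_S_MPC) / 1e9:.1f} billion light-years")
    # m/s^2 * seconds per year * 100 = cm/s gained per year
    print(f"cH  = {a_h:.2e} m/s^2 = {a_h * YEAR_S * 100:.2f} cm/s per year")
```

With this H₀ the sketch gives c/H ≈ 14 billion light-years and cH ≈ 7 × 10⁻¹⁰ m/s², i.e. about 2 cm/s gained per year. A different H₀ scales c/H inversely and cH proportionally.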

This lack of statistical equilibrium implies that the universe could behave like the non-equilibrium thermodynamic systems observed in the laboratory.

The information content of the expanding universe reflects that of the quantum state before inflation, and by information-theoretic arguments similar to those used to derive the entropy of a black hole, its value is 4π in natural units.

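The black hole entropy is

$$S_{BH} = \frac{A}{4 L_p^2}$$

(in units of Boltzmann's constant), where A is the area of the event horizon, computed from the Schwarzschild radius $r_s = 2GM/c^2$ of a black hole of mass M as $A = 4\pi r_s^2$, and $L_p$ is the Planck length,

$$L_p = \sqrt{\frac{\hbar G}{c^3}},$$

where G is the gravitational constant, c is the speed of light, and $\hbar$ is the reduced Planck constant.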

This beautiful Bekenstein-Hawking entropy formula connects thermodynamics, the quantum world and gravity.
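To get a feel for the magnitudes involved, the Bekenstein-Hawking formula S = A/(4 L_p²), with A = 4π r_s², r_s = 2GM/c² and L_p² = ħG/c³, can be evaluated for a black hole of one solar mass. A minimal Python sketch; the solar-mass example and the rounded CODATA constants are assumptions, not from the original, and the entropy is returned in units of Boltzmann's constant:

```python
import math

# SI constants (rounded CODATA values) and an assumed example mass.
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8        # speed of light, m/s
HBAR = 1.054571817e-34  # reduced Planck constant, J s
M_SUN = 1.98847e30      # solar mass, kg (example input)


def schwarzschild_radius(mass_kg: float) -> float:
    """Horizon radius r_s = 2GM/c^2, in metres."""
    return 2.0 * G * mass_kg / C**2


def planck_length_sq() -> float:
    """Square of the Planck length, L_p^2 = hbar G / c^3, in m^2."""
    return HBAR * G / C**3


def bh_entropy(mass_kg: float) -> float:
    """Bekenstein-Hawking entropy S = A / (4 L_p^2), in units of k_B."""
    area = 4.0 * math.pi * schwarzschild_radius(mass_kg) ** 2
    return area / (4.0 * planck_length_sq())


if __name__ == "__main__":
    print(f"r_s(Sun)   = {schwarzschild_radius(M_SUN):.0f} m")
    print(f"S/k_B(Sun) = {bh_entropy(M_SUN):.2e}")
```

A solar-mass black hole has a horizon radius of about 3 km and an entropy of roughly 10⁷⁷ k_B. Because the area grows as r_s² ∝ M², doubling the mass quadruples the entropy.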

This same value of the universe’s entropy can also be used to determine the number of e-foldings during inflation: 6π², or about 59. This is consistent with the minimum needed to produce a sufficiently homogeneous universe by the epoch of the cosmic microwave background.
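As a quick arithmetic check of the number of e-foldings quoted above, 6π² e-foldings correspond to the scale factor growing by a factor of e^(6π²). A minimal Python sketch; the conversion to a power of ten is added here for illustration only:

```python
import math


def efoldings_from_pi() -> float:
    """Number of e-foldings N = 6 * pi^2, the value quoted in the text."""
    return 6.0 * math.pi ** 2


def expansion_factor(n_efolds: float) -> float:
    """Growth of the scale factor after N e-foldings: a_end / a_start = e^N."""
    return math.exp(n_efolds)


if __name__ == "__main__":
    n = efoldings_from_pi()
    factor = expansion_factor(n)
    print(f"N = {n:.2f} e-foldings")
    print(f"a_end / a_start = {factor:.2e} (about 10^{math.log10(factor):.1f})")
```

So 6π² ≈ 59.2 e-foldings means the universe grew by a factor of roughly 10²⁶ during inflation.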

If inflation occurs at a reasonable ~ GeV, one can also derive the observed value of the cosmological constant (dark energy) from the information content value, argues Dr. Padmanabhan.

This provides a connection between the two dark-energy-driven de Sitter phases: inflation and the present-day runaway universe.

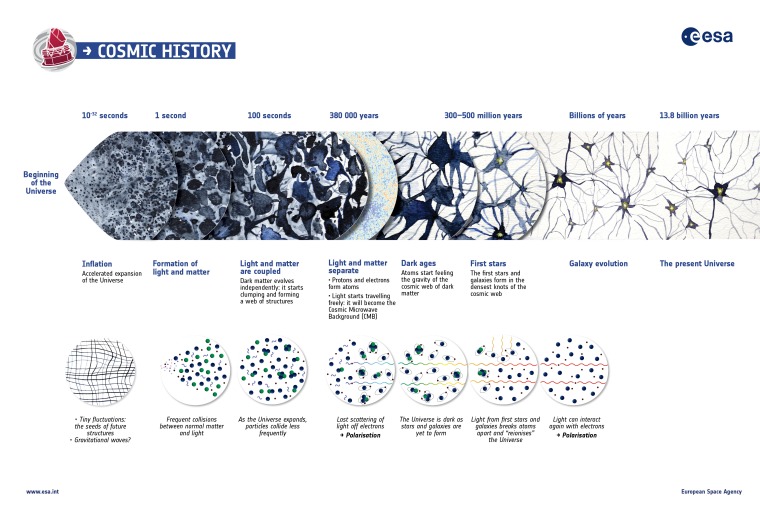

The table below summarizes the 4 major phases of the universe’s history, including the matter-dominated phase, which may or may not have included dark matter. Erik Verlinde in his new work, and Milgrom for over 3 decades, question the need for dark matter.

| Epoch | Dominated by | Ends at | a–t scaling | Size at end |
|---|---|---|---|---|
| Inflation | Inflaton (dark energy) | seconds | $a \propto e^{Ht}$ (de Sitter) | 10 cm |
| Radiation | Radiation | 40,000 years | $a \propto t^{1/2}$ | 10 million light-years |
| Matter | Matter (baryons); dark matter? | 9 billion years | $a \propto t^{2/3}$ | > 100 billion light-years |
| Runaway | Dark energy (cosmological constant) | “Infinity” | $a \propto e^{Ht}$ (de Sitter) | “Infinite” |

In the next article I will review the status of MOND (Modified Newtonian Dynamics), based on its phenomenology and the observational evidence.

References

E. Verlinde, 2011. “On the Origin of Gravity and the Laws of Newton.” JHEP 2011 (04): 29. http://arXiv.org/abs/1001.0785

T. Padmanabhan, 2016. “Do We Really Understand the Cosmos?” http://arxiv.org/abs/1611.03505v1

S. Perrenod, 2011. “Dark Energy Drives a Runaway Universe.” https://darkmatterdarkenergy.com/2011/07/04/dark-energy-drives-a-runaway-universe/

S. Perrenod, 2011. Dark Matter, Dark Energy, Dark Gravity. http://amzn.to/2gKwErb

S. Carroll and G. Remmen, 2016. “Guest Post: Grant Remmen on Entropic Gravity.” http://www.preposterousuniverse.com/blog/2016/02/08/guest-post-grant-remmen-on-entropic-gravity/